As a new phenomenon, artificial intelligence brings both opportunities and challenges to societies. Societies that reduce AI risks and take advantage of its opportunities can employ the technology successfully and safely. In this process, governments are responsible for adopting the regulations needed to control potential AI threats.

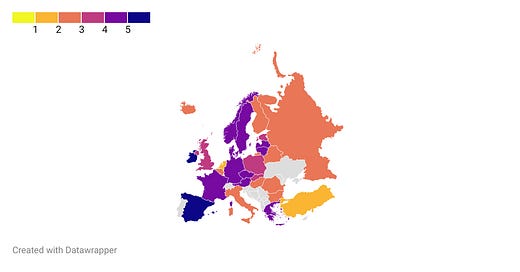

The Responsible AI Index monitors and reports on government regulations intended to protect people from AI threats. The index attempts to show where a safer AI ecosystem exists according to current legislation. Regulations that prevent AI threats are the main component used to rank countries in the index.

Summary on Europe

Firstly, the EU AI Act plays a significant role in shaping a responsible AI ecosystem in which developers build more human-centric artificial intelligence. The Act imposes obligations regarding human control and selective access to high-risk AI systems. However, not all countries on the continent have implemented the EU AI Act, because the document will only become mandatory in 2026. The map reflects this, showing significant differences among countries depending on how far they have implemented the Act.

Secondly, the General Data Protection Regulation has a positive impact on securing personal data from exploitation and misuse. Similarly, non-EU countries have adopted data protection laws that protect personal data and foster a safe environment for it across the continent.

Thirdly, AI militarization is trending in three directions. The first group of countries is actively working to improve its capabilities with AI or autonomous weapons without any restrictions, while the second group advocates using artificial intelligence in the military sector within some regulatory framework. The last group does not make its intentions explicit but coordinates its military plans with NATO, Russia, and similar actors.

Fourthly, countries on the continent still need legislation that mandates algorithmic transparency for AI systems in the public sector. In light of increasing migration and the rise of right-wing parties, algorithmic transparency is necessary to curb discriminatory electronic platforms.

Finally, Ireland is the leading country, where state legislation is making AI systems more responsible and human-centric. Ireland's role in AI militarization appears minimal according to the current research. On the other hand, the Netherlands and Belgium have been hesitant to adopt strategic laws that impose human control over AI and selective access to advanced academic AI systems.

Methodology, Ranking and Sources

AI Risks

1. Uncontrollable AI. Uncontrolled robots pose a risk to human safety through their erratic actions. At this stage of AI development, governments should enact legislation that prevents technology developers from entirely excluding human participation in AI systems. This principle requires businesses and organizations developing AI programs to have a backup plan in place for when a system becomes uncontrollable.

Question 1: Does the country's legislation require AI developers to maintain human control over the AI system they have developed?

2. Academic AI. By using AI systems built for academic purposes, individual groups can carry out research on weapons of mass destruction (WMDs) that could prove hazardous to communities. This increases the availability of chemical and biological weapons and facilitates access to their development processes. The secrets of several nuclear-armed powers' missiles and nuclear weapons could also be revealed to other nations. AI systems with strong academic research potential can not only incite an arms race between states but also transfer these techniques to non-state actors and threaten the current system of regulating WMDs.

One way to monitor the use of artificial intelligence is through state legislation that limits uncertified access to sophisticated academic AI systems. Such legislation might keep the world from devolving into a war involving weapons of mass destruction.

Question 2: Does the country impose restrictions on access to advanced academic AI systems from uncertified sources?

3. Social Scoring. First, governments may use discriminatory algorithms on their electronic platforms that target social minorities and support apartheid and segregation. Second, AI systems might be used to assess citizens based on how closely they follow the rules and to limit their basic rights. Third, social scoring systems that use AI algorithms penalize people who have not yet committed a crime but are deemed capable of doing so. People's freedom is thus restricted and their fundamental rights remain under threat.

To mitigate these risks, the public must have access to the AI algorithms used in the public sector. Furthermore, independent specialists must be able to study and evaluate these algorithms regularly.

Question 3: Are the algorithms of national AI projects open for public discussion in the country?

4. Manipulative AI. Deepfakes, and chatbots built on either unreliable data or deliberately selected data sources, are examples of systems that can be exploited to sway public opinion. If its data source is subject to stringent restrictions, an AI chatbot may give deliberately limited responses; if it draws on an abundance of internet-based data, it may generate inaccurate information. Both situations have the potential to manipulate the public in the absence of legislation that simultaneously protects freedom of speech and identifies reliable data sources.

Question 4: Does the country's regulation mandate the use of reliable data sources for AI model training?

5. Data Exploitation. Since data is a fundamental component of artificial intelligence, developers need ever more data to train their models. This demand can occasionally lead to the misuse of personal information. To hold AI developers more accountable, governments should enact laws that protect and secure personal data and prohibit its use for AI model training without consent.

Question 5: Does the country's legislation protect personal data from misuse?

6. AI Militarization. Big data analysis and automated targeting are two uses of military AI. Some nations now prioritize developing lethal autonomous weaponry that improves targeting precision and allows them to use smaller weapons to destroy enemy warehouses and vital infrastructure. However, the race for AI weapons feeds states' appetite for armaments and contributes to shifting the existing balance of power.

Question 6: Does the country have plans to develop AI (automated weapons) as part of its military strategy?
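The six questions above can be read as a simple yes/no rubric. The source does not state how answers are weighted or combined into a ranking, so the sketch below is only an illustration under assumed rules: each question is answered yes or no, every question carries equal weight, and Question 6 is inverted because a "yes" there signals a risk rather than a safeguard. The names `Answers`, `score`, and `rank`, and the placeholder countries, are hypothetical and do not reflect the index's actual data or method.

```python
from dataclasses import dataclass

# ASSUMPTION: equal weights and binary answers; the index's real scoring
# rules are not given in the text.
# For Questions 1-5 a "yes" indicates a safeguard is in place.
# For Question 6 a "yes" indicates plans for AI weapons, i.e. a risk.
RISK_QUESTIONS = frozenset({6})
ALL_QUESTIONS = range(1, 7)


@dataclass(frozen=True)
class Answers:
    country: str
    yes: frozenset  # numbers of the questions answered "yes"


def score(answers: Answers) -> int:
    """Count one point per question whose answer favors a safer ecosystem."""
    total = 0
    for question in ALL_QUESTIONS:
        answered_yes = question in answers.yes
        if question in RISK_QUESTIONS:
            # A "no" on a risk question earns the point.
            total += 0 if answered_yes else 1
        else:
            total += 1 if answered_yes else 0
    return total


def rank(all_answers):
    """Order countries from highest to lowest score, ties broken by name."""
    return sorted(all_answers, key=lambda a: (-score(a), a.country))


# Placeholder inputs, not real findings about any country.
examples = [
    Answers("Country A", frozenset({1, 2, 3, 4, 5})),
    Answers("Country B", frozenset({5, 6})),
    Answers("Country C", frozenset({1, 5})),
]

for entry in rank(examples):
    print(entry.country, score(entry))
```

Under these assumed rules, a country scores highest when it answers "yes" to the five regulatory questions and "no" to the militarization question. A weighted or graded scheme would only need a different `score` function.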

Ireland

Spain

Germany

Greece

Czech Republic

France

Sweden

Norway

Denmark

Austria

Latvia

Lithuania

Estonia

United Kingdom

Poland

Slovakia

Finland

Iceland

Russia

Italy

Belgium

Hungary

Romania

Bulgaria

Belarus

The Netherlands

Turkiye